Can AI solve a wicked problem?

With the incredible advances now seen in AI, to what extent can computer-generated input help in ‘solving’ wicked problems?

19 September 2023

Whether we like it or not, the world is facing a growing number of wicked problems – problems where there is no obvious route to resolution, and no agreement on the core challenges. Such complex situations, where the boundaries, impact and resources are often constantly moving, require the input and behaviour change that can only come from a human-centred approach: one where conversations are open, conflict is explored and the search for definition and solution is inherently ongoing. In this soft systems methodology, we need to encourage diversity and inclusion, transformation and implementation, as well as collaboration and competition. But even with the incredible advances now seen in AI, to what extent can computer-generated input actually help in ‘solving’ such wicked problems?

Increasingly, we are bombarded by problems that often seem impossible to solve, creating a culture of challenge, unacceptance, and anxiety. When faced with issues such as climate change, nuclear power, global poverty and, increasingly, the ethics of using AI, it is difficult to remain impartial. Yet staying current on these issues is a continual struggle, meaning our views and understanding are swept along and drowned in the torrent of media coverage and the academics’ need to publish or perish.

This is because issues such as climate change and global poverty are wicked problems. They are so inherently complex and evolving that it becomes nearly impossible to untangle the web of information into manageable parts. They are built of so many interconnected pieces that they lack clarity and boundaries, and (possibly most concerning) each one is unique, so previous experience or principles cannot be relied on to provide a solution. Because the problem is so broad, it is often impossible to fully grasp what the issue and its scope actually are. Without this understanding, wicked problems lack clear objectives – there can be direction and improvements but no way of confirming success. And given that wicked problems are bound by real-world controls and constraints, even implementing those incremental developments is fraught with risk and hard won against policy, budget, and, particularly, human behaviour.

Given their complex and subjective nature, ‘… we are all beginning to realize that one of the most intractable problems is that of defining problems (of knowing what distinguishes an observed condition from a desired condition) and of locating problems (finding where in the complex causal networks the trouble really lies).’ [1] In other words, even framing the problem is problematic.

Whilst many have tried to define a wicked problem, their size and behaviour make this complex in its own right, but key commonalities include:

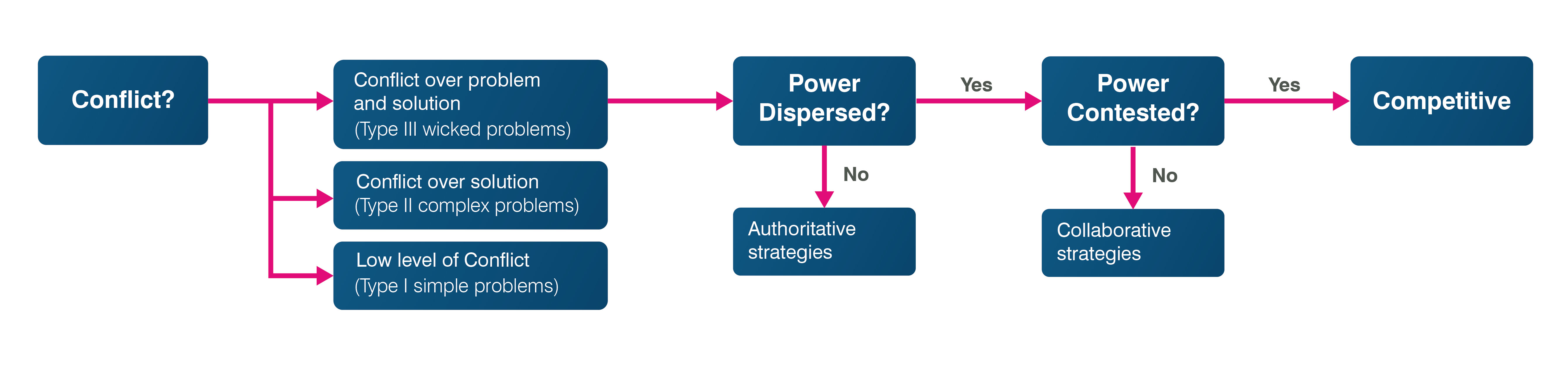

When looking at how to approach a wicked problem, Roberts (2001) discussed three separate ‘coping strategies: authoritative, competitive, and collaborative.’ [2]

Picking a strategy is based on the level of conflict and delegation of power, but the key to success for each is based on having the right people in the room. Therefore, it is clear to see that, whilst technology may be able to play a supportive role in these approaches, each one is fundamentally reliant on the discussion, assessment, and decision-making process that only a human can perform.

For the more multifaceted, wicked-type problems, there are no formulas, metrics, or previous data to enable a level of autonomy, and the mechanisms for dealing with them are based on harnessing the right people skills. It follows that people are at the root of complex problem solving. This could be extended to encompass scenarios that require a complex network of stakeholders, have unknown entities, are based on developing data/technologies, or rely on behaviour change, such as business transformation.

The fundamental differences that enable humans to work through solutions revolve around their ability to think critically, subjectively and outside of constructs, and to encourage diversity and ‘contributory dissent’ [3]. Diversity is shown to have a very tangible impact not only on complex problem solving, but also on wider business success. Companies that go beyond showing support and actively harness the diversity and inclusion of their employees can see significant financial and competitive gains [4].

So, if companies can only create an agile and diverse enough environment to tackle increasing change and wicked problems by bringing in the right people, then to what extent can we really expect AI to transform the solutioning of complex problems? It is important not to jump to the assumption that teams will be fully replaced by technology, but even with AI in a supporting role, questions still need to be addressed. Ultimately, businesses need diversity in order to make holistic, whole-scale decisions, but risk losing this ability with the inclusion of new technology.

Work has been done to look at the balance between computers and humans in solving various aspects of business functions [5]. It shows that, at one end of the scale, fully enclosed autonomous systems are difficult to manage and need a degree of human intervention for assurance. At the other end, even where AI is only processing the data to support human decision-making, it still needs close monitoring to check how it is processing the information and not just what it generates. The focus here is AI and its ability to address wicked problems, not autonomy (and for this purpose, AI will encompass all ‘computer science and robust datasets, to enable problem-solving’ [10] so as not to unnecessarily reduce the field). Even so, it is clear that the whole range from generative to cognitive computing still requires a degree of human involvement. So, the difficulty becomes finding the right split between AI and human intelligence when dealing with wicked problems.

As research suggests, the key to solutioning wicked problems is the human ability to respond in diverse, subjective and (to an extent) divergent ways, taking into consideration the immediate environment and the wider context. However, there are questions over current AI capability to recreate this innovation and creativity [6] [7]. And conversely, there are growing concerns that through overuse and reliance on AI, humans will lose that capability too – people will eventually stop thinking for themselves and everyone will respond with the same formulated outputs [8].

For AI to successfully support complex, soft system problem solving, the tools would have to process the data at a human level and speed, but work in conjunction with humans so as not to reduce the creativity and diversity required in these situations.

But as it stands, ‘AI still has a long way to go in making the ultimate decisions in real-world life situations that require more holistic, subjective reasoning. […] We still need humans in the middle to assess the value of insights and decisions to the welfare of humans, businesses and communities.’ [9]

One particular concern is that AI cannot yet fully appreciate the subjectivity of wicked problems and so risks building in bias, tainted data or incorrectly prioritised objectives. Ultimately, AI doesn’t have the capacity to process at the level needed to see these problems through from framing and conceptualisation to solution, and there are serious ethical questions about whether humans have yet found or understood the appropriate balance between machine and human intelligence.

Considering the incredible advances in technology, it would be remiss to assume that, because AI does not have the capability to solve today’s wicked problems, it will not be able to support us in doing so in the future. As with any aspect of technology, businesses need to leave capacity to explore and embed new technologies, or risk not fully exploiting the possibilities and advancements and being on a constant loop of business transformation each time practices are forced to change.

Ultimately, while AI may not have the skills to solve wicked problems right now, it can support the process, acting as a co-pilot that collects and collates information to feed to the human user. Even with future developments, we should be very wary of letting machines encroach too far on the traits that make humans unique, and should instead unlock the real power of human-machine teaming.

Do you have a wicked or complex problem which needs solving? Get in touch with us to see how our brilliant people can help you.

[1] Dilemmas in a general theory of planning (epa.gov)

[2] (PDF) Wicked Problems and Network Approaches to Resolution (researchgate.net)

[3] Embracing the obligation to dissent | McKinsey

[4] Diversity wins: How inclusion matters (mckinsey.com)

[5] Managing AI Decision-Making Tools (hbr.org)

[6] AI And Consciousness: Could It Become 'Human'? (forbes.com)

[7] It's time to accept AI will never think like a human – and that's okay | BBC Science Focus Magazine

[8] Human Borgs: How Artificial Intelligence Can Kill Creativity And Make Us Dumber (forbes.com)

Kathryn is a consultant based in the Bath office, having joined BMT in 2022. She is currently working within Training Consultancy Services but has also had roles within Research and Development and Management Consultancy Capability.

She joined BMT with 18 years’ experience in the education sector, including time as an English Teacher, Project Officer for the Mayflower Commemorations and a Learning and Development Advisor for the Royal Navy.

Kathryn has an interest in STEM initiatives, and supports the creation of STEM activities within BMT. She has also supported in additional pieces of work, including an internal consulting campaign, agile conference, Horizon Scanning articles and Thought Leadership and Insight papers.
